Publications

Gzip Predicts Data-sensitive Scaling Laws

In Preparation for NeurIPS 2024

Rohan Pandey

Uncovering Cross-modal Syntax in Vision-Language Models with Causal Intervention

In Progress

Rohan Pandey, Aryaman Arora, Tristan Thrush, Christopher Potts

Multimodal Learning Without Multimodal Data: Guarantees and Applications

Paul Pu Liang, Chun Kai Ling, Yun Cheng, Alexander Obolenskiy, Yudong Liu, Rohan Pandey, Alex Wilf, Louis-Philippe Morency, Ruslan Salakhutdinov

Towards Vision-Language Mechanistic Interpretability: A Causal Tracing Tool for BLIP

Vedant Palit*, Rohan Pandey*, Aryaman Arora, Paul Pu Liang

WinogroundVQA: Zero-shot Reasoning with LLMs for Compositional Visual Question Answering

In Academic Purgatory

Rohan Pandey, Spandan Das, Tristan Thrush, Paul Pu Liang, Ruslan Salakhutdinov, Louis-Philippe Morency

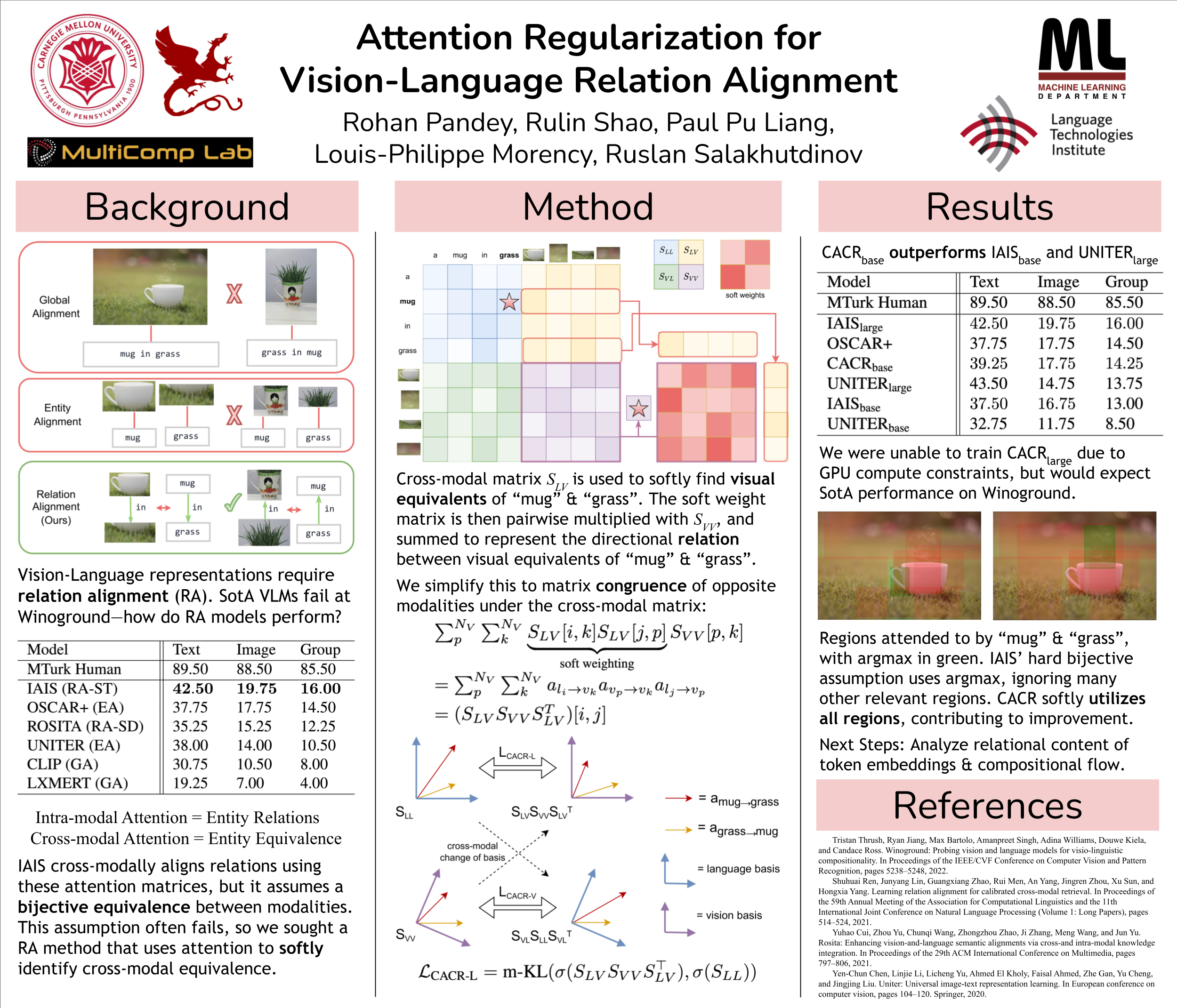

Cross-modal Attention Congruence Regularization for Vision-Language Relation Alignment

Rohan Pandey, Rulin Shao, Paul Pu Liang, Ruslan Salakhutdinov, Louis-Philippe Morency

Syntax-guided Neural Module Distillation to Probe Compositionality in Sentence Embeddings

Rohan Pandey

Does Structural Attention Improve Compositional Representations in Vision-Language Models?

Rohan Pandey, Rulin Shao, Paul Pu Liang, Louis-Philippe Morency

Probing Compositional Representations in Neural Language Models with Semantic Graphs

Preprint, 2022

Rohan Pandey, Uri Alon, Frank Xu, Graham Neubig

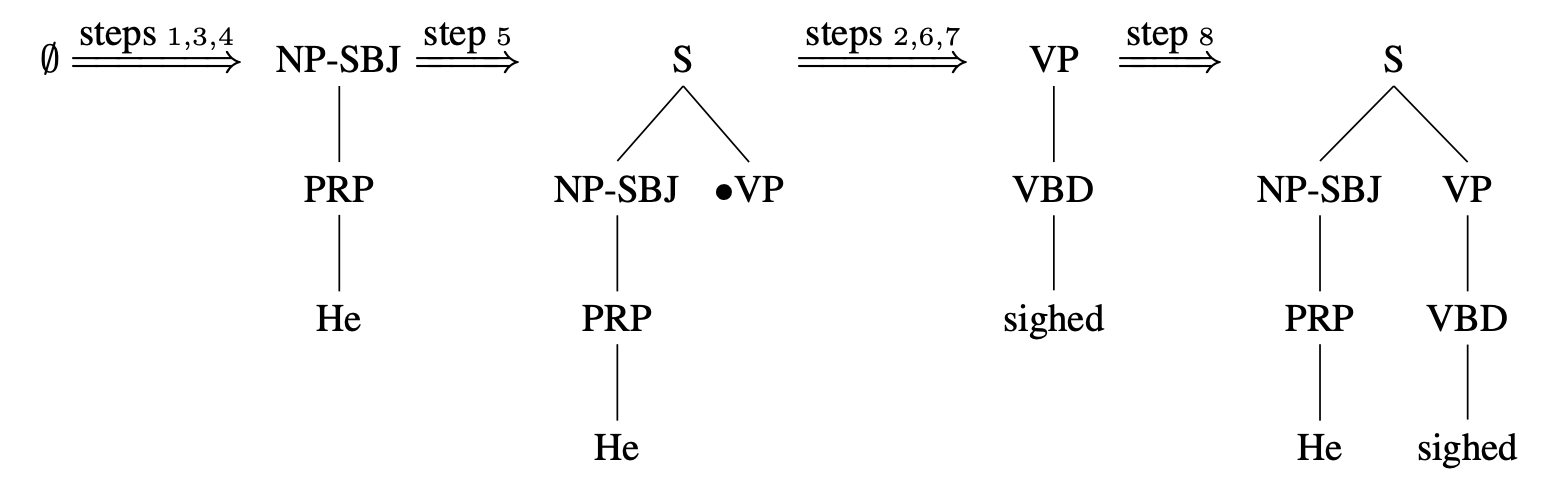

A Family of Cognitively Realistic Parsing Environments for Deep Reinforcement Learning

Adrian Brasoveanu, Rohan Pandey, Maximilian Alfano-Smith

Athena 2.0: Contextualized Dialogue Management for an Alexa Prize SocialBot

Juraj Juraska, Kevin K. Bowden, Lena Reed, Vrindavan Harrison, Wen Cui, Omkar Patil, Rishi Rajasekaran, Angela Ramirez, Cecilia Li, Eduardo Zamora, Phillip Lee, Jeshwanth Bheemanpally, Rohan Pandey, Adwait Ratnaparkhi, Marilyn Walker

Transfer Learning for Mental Health Evaluation from Natural Language

Preprint, 2020

Kamil Kisielewicz, Rohan Pandey, Shivansh Rustagi, Narges Norouzi